Recursion: The Foundation of Functional Iteration

This chapter delves into some deep foundational concepts of functional programming theory, including potentially advanced topics like fixed-point combinators discussed later. These aspects can go beyond everyday practical application and might feel challenging.

Beginners, in particular, should not feel obligated to grasp every theoretical detail immediately. If you find parts of this section difficult or overly abstract, please feel free to skim or skip ahead to the next practical topic.

In the previous section, we saw how functional programming tackles iteration using higher-order functions (HOFs) like fold (or reduce) and sequence generation functions like unfold, often combined elegantly with pipelines. This approach focuses on what transformation to apply to a collection or sequence.

While the previous section focused on HOFs for iteration, this section explores Recursion as the fundamental mechanism in functional programming for achieving repetition, mirroring imperative loops.

The core idea underlying recursion is self-reference —the concept of something referring to or containing itself—and a function calling itself is just one common implementation of this principle.

The Concept of Self-Reference

Section titled “The Concept of Self-Reference”This self-referential nature appears in many domains:

- Mathematical Definitions: For example, the Fibonacci sequence is defined as F(n) = F(n-1) + F(n-2), defined by its own previous values.

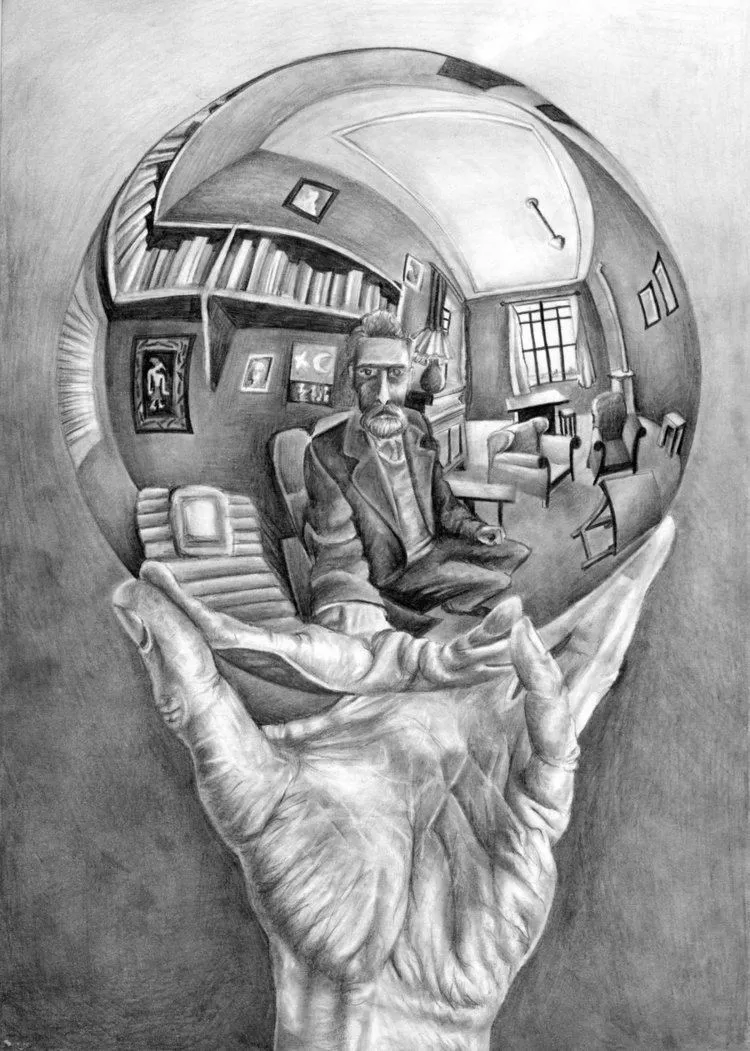

- Art: Nested structures like those in Escher’s work, where an image contains a smaller version of the same image.

- Linguistics: Self-referential paradoxes like “This statement is false.”

- Computer Science: Recursive functions in programming, and data structures like linked lists and trees where nodes refer to other nodes of the same type.

- Fractals: Self-similar shapes where parts resemble the whole structure.

Hand with Reflecting Sphere, also known as Self-Portrait in Spherical Mirror by M. C. Escher

Hand with Reflecting Sphere, also known as Self-Portrait in Spherical Mirror by M. C. Escher

Viewing recursion through the lens of self-reference allows us to understand it as a more universal, even philosophical concept that transcends mere programming techniques. The act of referring to oneself holds both infinite possibilities and potential contradictions, which contributes to the depth and fascination of recursion.

Recursion: The Functional Loop

Section titled “Recursion: The Functional Loop”With the concept of self-reference in mind, let’s look at its application in programming. In functional programming, where mutable state and explicit loop constructs (for, while) are often avoided, recursion – a function calling itself – becomes the primary tool for performing repetitive tasks. Any computation that can be done with an imperative loop can also be done with recursion.

Let’s revisit the task of summing integers from 1 up to n. Imperatively, we use a loop with an accumulator. Functionally, we can use recursion:

// Naive recursive sum: Sum integers from 1 to nlet rec sumUpTo n = // Base case: When n is 0 or less, the sum is 0. if n <= 0 then 0 // Recursive step: Sum is n plus the sum up to n-1. else n + sumUpTo (n - 1)

let resultNaive = sumUpTo 5// 5 + 4 + 3 + 2 + 1 = 15printfn "Naive recursive sum up to 5: %d" resultNaiveNotice the rec keyword before let. This explicitly tells the F# compiler that sumUpTo is a recursive function, allowing it to call itself within its definition. The function has two parts:

- Base Case: A condition (

n <= 0) that stops the recursion. Without a base case, the function would call itself infinitely (much like infinite reflections, but computationally problematic!). - Recursive Step: The function calls itself with a modified argument (

n - 1) that moves closer to the base case (like looking at the next smaller reflection in the mirror analogy).

This structure directly implements the looping logic: the recursive step repeats the core logic (adding n), and the base case terminates the loop. In functional programming, loops are recursion.

The Stack Problem and Tail Call Optimization (TCO)

Section titled “The Stack Problem and Tail Call Optimization (TCO)”The naive recursive approach above has a potential problem. Each time sumUpTo calls itself, a new frame is pushed onto the call stack to store intermediate information. For large values of n, this can lead to a stack overflow error.

Imperative loops don’t typically suffer from this because they reuse the same stack frame. How can functional languages use recursion for general-purpose loops? The answer is Tail Recursion and Tail Call Optimization (TCO).

A function call is in tail position if it’s the very last action performed by the containing function before it returns. A recursive call in tail position is called tail recursion.

// Tail-recursive sum: Sum integers from 1 to nlet sumUpToTailRecursive n = // Inner helper function 'loop' is tail-recursive let rec loop accumulator currentN = // Base case: When currentN reaches 0, return the accumulated sum. if currentN <= 0 then accumulator // Recursive step: The recursive call is the *last* thing done. else // Add currentN to accumulator, decrement currentN for the next call. loop (accumulator + currentN) (currentN - 1)

// Start the loop with initial accumulator 0 and initial n. loop 0 n

let resultTailRec = sumUpToTailRecursive 5 // 15printfn "Tail recursive sum up to 5: %d" resultTailRec

// This can handle large numbers without stack overflow due to TCO// let largeSum = sumUpToTailRecursive 100000// printfn "Tail recursive sum up to 100000: %d" largeSum // Works fineIn sumUpToTailRecursive, the actual recursion happens in the inner loop function. Crucially, the recursive call loop (accumulator + currentN) (currentN - 1) is the very last operation in the else branch. There’s no calculation (like n + ...) waiting to happen after the recursive call returns.

F# (and many other functional languages) performs Tail Call Optimization (TCO). When the compiler detects a tail-recursive call, it compiles it not as a standard function call (which pushes a new stack frame) but as an efficient jump, similar to how an imperative loop works.

This means tail-recursive functions can run indefinitely without consuming stack space, making them a viable and efficient way to implement any kind of loop.

Higher-Order Functions: Abstracting Recursion

Section titled “Higher-Order Functions: Abstracting Recursion”Now that we understand recursion as the fundamental looping mechanism, we can see Higher-Order Functions (HOFs) like map, fold, and filter in a new light: they are abstractions over common recursive patterns.

Consider List.fold. Its purpose is to process a list and accumulate a result. This is a very common pattern, implemented recursively like this (conceptual example):

// Conceptual recursive implementation of foldLeftlet rec foldLeftRecursive f acc list = match list with | [] -> acc // Base case: empty list, return accumulator | head :: tail -> // Recursive step: process head, recurse on tail let newAcc = f acc head // Apply the combining function foldLeftRecursive f newAcc tail // Tail recursive callThe List.fold HOF captures this exact pattern. Instead of writing the recursion manually, you provide the combining function (f) and the initial accumulator (acc), and fold handles the recursive traversal for you.

// Using List.fold with the pipeline operatorlet sumUsingFold = [1; 2; 3; 4; 5] // Start with the list |> List.fold (+) 0 // Pipe it into List.fold with the operation and initial value

// (+) is the combining function 'f'// 0 is the initial accumulator 'acc'// The list [1..5] is piped in as the last argumentprintfn "Sum using fold with pipeline: %d" sumUsingFold // 15Using fold (especially with the pipeline) is more declarative – you specify what operation to perform (+) rather than how to iterate recursively.

Similarly:

List.mapabstracts the recursive pattern of applying a function to each element of a list and building a new list.List.unfoldabstracts the recursive pattern of generating a sequence based on a state and a termination condition.

Under the Hood: Recursion vs. Loops in HOFs

Section titled “Under the Hood: Recursion vs. Loops in HOFs”While HOFs conceptually abstract recursive patterns, it’s important to know that their internal implementation in standard libraries (like .NET’s Base Class Library used by F#) might not always be purely recursive F# code.

For performance reasons, especially when operating on data structures like arrays or interacting with underlying platform features, library functions like List.map or Seq.fold might be implemented internally using optimized, low-level imperative loops (while, for) written perhaps in C# or directly manipulating internal data structures.

Does this invalidate the functional approach? Not at all. The key is that the external interface and the semantic behavior of these HOFs remain purely functional. They take functions as arguments, operate on data immutably (returning new collections instead of modifying old ones), and hide the imperative implementation details. They provide a functional abstraction layer, regardless of whether the lowest-level implementation uses recursion or an optimized imperative loop. This allows programmers to benefit from functional composition and reasoning while leveraging efficient underlying implementations.

Recursion for Recursive Problems

Section titled “Recursion for Recursive Problems”While HOFs are excellent for abstracting common iteration patterns over linear structures like lists or sequences, direct recursion remains the most natural and often clearest approach for problems that are inherently recursive in nature.

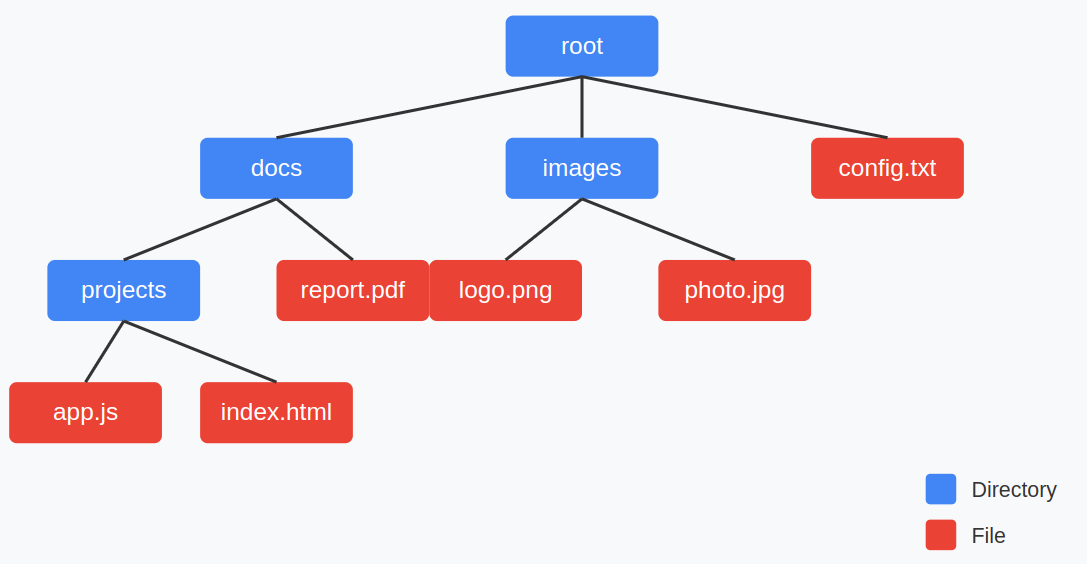

A classic example is processing tree-like data structures. Consider traversing a file system directory structure:

- To process a directory, you need to process each file within it.

- You also need to process each subdirectory within it.

- Processing a subdirectory is the same problem as processing the top-level directory, just on a smaller scale.

This self-similar structure screams recursion:

// Conceptual example: Processing a directory treetype FileSystemItem = | File of name: string | Directory of name: string * items: FileSystemItem list

let rec processItem item = match item with | File (name) -> printfn "Processing file: %s" name // Perform action on the file | Directory (name, items) -> printfn "Entering directory: %s" name // Perform action on the directory itself (optional) items |> List.iter processItem // Recursive call for each sub-item! printfn "Exiting directory: %s" name

// Trying to express this cleanly with only standard HOFs like fold or map// can be much more complex and less intuitive than direct recursion.Other examples include parsing nested structures, evaluating mathematical expressions represented as trees, certain sorting algorithms (like Quicksort or Mergesort), etc. In these cases, the recursive structure of the function directly mirrors the recursive structure of the data or the problem, leading to elegant and understandable code.

Recursion vs. HOFs: Choosing the Right Tool

Section titled “Recursion vs. HOFs: Choosing the Right Tool”So, when should you use direct recursion, and when should you prefer HOFs? Here’s a pragmatic guide:

- Prefer HOFs for Standard Patterns: If you are iterating over a list/sequence/array to transform elements (

map), select elements (filter), or accumulate a result (fold), use the corresponding HOF. They are more declarative, generally less error-prone (no need to manage base cases and recursive calls explicitly), and clearly express the intent. - Critique of Misplaced Recursion: Be wary of using recursion just for show, especially for tasks that are perfect fits for HOFs. For instance, summing the numbers 0-5 using manual recursion, as sometimes presented in introductory FP texts, is arguably less clear and less idiomatic than using

List.fold (+) 0 [0..5]orList.sum [0..5]. The core of that problem is folding (combining elements with an operation), sofold(orsum) is the right abstraction. Using recursion there doesn’t necessarily showcase FP’s strengths better than using the appropriate HOF. Choose the tool that best fits the problem’s essence. - Use Recursion for Non-Standard or Recursive Problems: If the iteration pattern doesn’t neatly fit a standard HOF, or if the problem/data structure is inherently recursive (like trees), then direct recursion (especially tail recursion) is the appropriate tool.

- Understand the Problem Deeply: Only opt for direct recursion (especially complex recursion) if you deeply understand the recursive structure of the problem you are solving and are confident in your implementation (base cases, recursive steps, termination). Otherwise, stick to HOFs where possible.

Self-Reference Without Self-Reference: Recursion and Turing Completeness

Section titled “Self-Reference Without Self-Reference: Recursion and Turing Completeness”The concept of “Self-Reference Without Self-Reference” represents one of the most fascinating aspects of theoretical computer science and functional programming. At its core, it demonstrates something profound: recursive behavior can be achieved without explicit recursive definitions in the language. This has deep implications for understanding the fundamental power of computation.

The Challenge in Pure Functional Models

Section titled “The Challenge in Pure Functional Models”In the lambda calculus (the theoretical foundation of functional programming), there initially appears to be no direct way to define a recursive function because a function cannot refer to itself within its own definition using its name. This limitation would seemingly make it impossible to implement loops or recursive algorithms, essential components for general-purpose computation.

If a computational model cannot inherently express repetition (loops or recursion) and conditional branching (selection), it cannot solve all computable problems. The ability to solve any problem solvable by a theoretical Turing machine is known as Turing completeness.

Achieving Repetition: The Role of Fixed-Point Combinators

Section titled “Achieving Repetition: The Role of Fixed-Point Combinators”However, fixed-point combinators, such as the Y combinator, demonstrate that this initial intuition about lambda calculus’s limitations is incorrect. They provide a mechanism to achieve recursion using only the core features of lambda calculus: function definition (lambda abstraction) and function application.

The Y Combinator: Self-Reference Through Indirection

The Y combinator achieves self-reference without direct naming through clever use of higher-order functions:

// Note: The 'rec' here is for F#'s definition, but the *body*// of Y and its application below don't rely on direct recursion.let rec Y f = (fun x -> f (fun y -> x x y)) (fun x -> f (fun y -> x x y))This function doesn’t contain explicit recursion in the logic it enables. It creates a structure where:

- A function

f(representing the recursive step) receives a way to call itself (fun y -> x x y) as an argument. fcan then invoke this argument to achieve the next step of the recursion.- The Y combinator orchestrates this self-application (

x x) automatically.

Practical Implementation in F#

Let’s examine a factorial function using the Y combinator. The factorial logic itself (fun self -> fun n -> ...) doesn’t use let rec or refer to factorial:

// Define the Y combinator (as above)let rec Y f = (fun x -> f (fun y -> x x y)) (fun x -> f (fun y -> x x y))

// Define the *logic* of factorial without direct recursionlet factorialStep = fun self -> // 'self' will be the function to call recursively fun n -> if n = 0 then 1 else n * self (n - 1) // Call the recursively passed 'self'

// Create the recursive factorial function using Y combinatorlet factorial = Y factorialStep

// Usageprintfn "%d" (factorial 5) // Outputs: 120Notice how the factorialStep function receives self as an argument, representing the function itself, enabling the recursive call self (n - 1). The Y combinator provides the mechanism to make this self argument correctly refer back to the function being defined.

Pure Lambda Calculus Implementation

In pure lambda calculus terms, without any language-specific keywords like rec, the Y combinator is:

Y = λf.(λx.f (x x)) (λx.f (x x))This pure expression demonstrates that recursion is inherent in the mechanism of functional abstraction and application.

Implications: Functions, Recursion, and Turing Completeness

Section titled “Implications: Functions, Recursion, and Turing Completeness”The existence of fixed-point combinators like Y is theoretically crucial because it proves that repetition (recursion/loops) can be achieved using only functions.

For a system to be Turing complete, it generally needs:

- Selection (Conditional Branching): The ability to perform different actions based on conditions. In lambda calculus, this is achieved by encoding boolean values and

if-then-elselogic as functions (e.g., Church booleans). - Repetition (Iteration/Recursion): The ability to repeat actions. As demonstrated, fixed-point combinators provide this capability using only functions.

Since lambda calculus can express both selection and repetition using only its fundamental building blocks (abstraction and application), it is Turing complete.

This means:

- Recursion is not a primitive feature that must be added to a language; it can emerge from simpler functional concepts.

- Looping constructs can similarly be derived.

- The expressive power of pure functions is sufficient for universal computation.

Functional languages like F# inherit this power. While they provide convenient syntax like let rec for defining recursive functions directly, the underlying theoretical foundation guarantees that even without such syntax, recursion (and thus any computable algorithm) could still be expressed using techniques like the Y combinator.

The Y combinator isn’t just a clever trick—it’s a profound demonstration that self-reference, recursion, iteration, and ultimately Turing completeness are inherent properties of the functional paradigm based on lambda calculus.

Summary

Section titled “Summary”- Recursion is the fundamental mechanism for implementing loops and iteration in functional programming, replacing imperative

forandwhileloops. In FP, loops are recursion. - Tail Call Optimization (TCO) is crucial for making recursion efficient for general-purpose loops in languages like F#, preventing stack overflows.

- Higher-Order Functions (

map,fold, etc.) abstract common recursive patterns, leading to more declarative and often safer code. - While HOFs conceptually abstract recursion, their low-level implementation in libraries might use optimized imperative loops for performance, but their functional interface is maintained.

- Direct recursion remains the best tool for inherently recursive problems (e.g., tree structures).

- Choose wisely: Prefer HOFs for standard iteration patterns; use recursion when the problem structure demands it or when HOFs are awkward. Avoid using recursion just because you can when a standard HOF is a better fit.